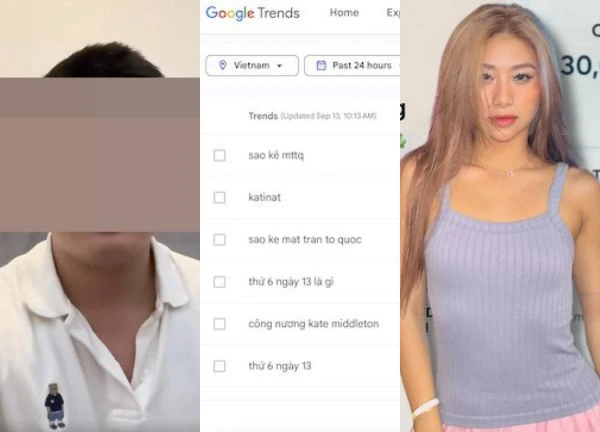

A serious privacy incident, in which thousands of ChatGPT chats appeared publicly in Google search results, has left users confused and raised big questions about personal data security in the AI era.

In the era of the artificial intelligence (AI) boom, OpenAI's ChatGPT is becoming a popular companion for learning, work, and entertainment. Recently, however, a serious privacy incident has led users to question how secure their personal information is when they use AI.

Security researchers recently discovered thousands of ChatGPT conversations that anyone could access through Google search. These were not just sample answers or technical instructions: many contained sensitive information such as personal confessions, work discussions, and medical details.

The cause was OpenAI's "Share chat" feature, which lets users share a conversation with others through a link. If a user enabled the "Make this chat discoverable" option, the conversation became public and could be indexed by search engines such as Google and shown to anyone.

However, the feature's interface was not clear enough. Many users assumed they were sharing privately with friends or colleagues, unaware that the content could be published widely on the Internet. As a result, thousands of conversations containing sensitive information were inadvertently exposed.

Shortly after the incident came to light, OpenAI suspended the option that made shared chats discoverable by search engines and worked with Google to remove the exposed links from search results. According to a report from Fast Company, more than 4,500 ChatGPT chat links had been shared publicly, a significant number given how rapidly the tool's user base is growing.

The incident is a reminder that, however advanced the technology, sharing information on digital platforms still carries many risks, especially when users are not given clear, transparent information about how their data is processed and stored.

Many people use ChatGPT to write personal emails, draft business plans, describe health conditions, or even discuss private matters. A small action such as turning on sharing can unintentionally make public information that seemed known only to you, raising questions about the responsibility of AI companies to protect user data. Sam Altman, CEO of OpenAI, has acknowledged in interviews that conversations with ChatGPT are not covered by the traditional legal protections for confidential data.

This is a major challenge as AI is increasingly used in sensitive fields such as healthcare, education, and personal counseling, where the lack of clear legal frameworks leaves personal data vulnerable to exposure or misuse. In addition, chats, even jokes or experiments, can be quoted out of context once made public, seriously harming the users involved.

This is not the first time security experts have warned about the risk of data leaks in large language models like GPT. Studies have shown that a model can retain some of the data it has been exposed to and inadvertently reproduce it when a user "asks the right way". This technique, known as a "reconstruction attack", suggests that information a model has absorbed is not necessarily erased, which poses a potential security risk. How serious the risk is depends on how the model is trained, updated, and controlled, and it calls for development companies to adopt stricter safeguards and monitoring.

The leak of thousands of ChatGPT chats is a strong wake-up call for users and developers alike about the importance of privacy in the digital age. AI offers outstanding benefits for content creation, access to knowledge, and work automation, but the risk of losing control of personal data is real.

Users need to be more aware of and careful about what they share on AI platforms, especially sensitive or private content. At the same time, technology companies must be transparent about how they collect, store, and share data, and must fix security vulnerabilities quickly once they are discovered.
